Twin Mirror

An interactive installation integrating custom software and hardware, including an autoencoder ML model designed and trained using the Keras deep learning library for Python. The installation was our contribution to the first Seoul Biennale of Architecture and Urbanism in Seoul, South Korea. The goal of the project was to create a public experience that showed people not only the power of emerging technologies such as facial recognition and machine learning, but also some of the limitations and even dangers of those technologies.

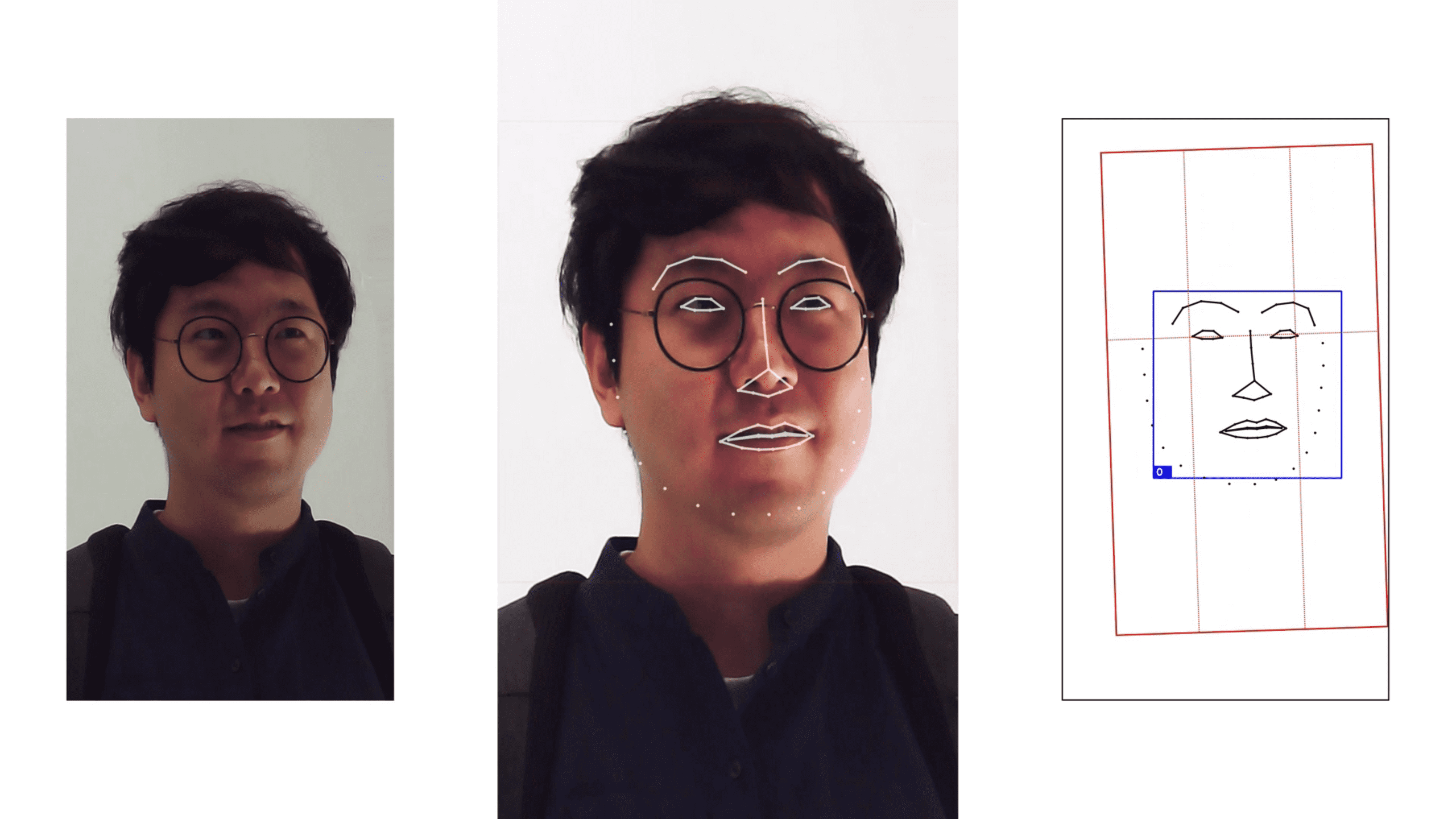

The installation is composed of a camera and two digital displays. When visitors walk in front of the camera, the system finds their face and then superimposes a different processed version of it, in real time, on each of the two displays. The two faces are created by the same machine learning algorithm trained on two different sets of data.

Latent space of autoencoder model trained during event

One model is trained on a standard dataset that facial recognition researchers actually use. It contains faces of famous people and tends to skew toward older white males. The other is trained live during the event on the faces of actual visitors.

Live facial recognition and rectifier algorithm for prediction and data generation

The data on which these systems are trained gives them an inherent bias. Although we designed the installation to make this bias obvious, the same problem is inherent in any algorithm that gets its intelligence from being trained on data. While we may think that an algorithm would give us a rational, unbiased view of a situation, it actually inherits the assumptions and biases of its human designers.
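To make the effect of training data concrete, here is a minimal sketch using synthetic data rather than real faces. It is not the installation's code: a linear autoencoder fit in closed form with an SVD (equivalent to PCA) stands in for the convolutional model, and the two "populations", the vector size, and all function names are assumptions chosen for illustration. The same input is reconstructed by two models that differ only in what they were trained on.

```python
import numpy as np


def fit_linear_autoencoder(data, n_components):
    """Fit a linear autoencoder (equivalent to PCA) to the rows of `data`."""
    mean = data.mean(axis=0)
    # The top right-singular vectors act as shared encoder/decoder weights.
    _, _, vt = np.linalg.svd(data - mean, full_matrices=False)
    return mean, vt[:n_components]


def reconstruct(model, x):
    """Encode `x` into the model's latent space, then decode it again."""
    mean, components = model
    code = (x - mean) @ components.T   # encode
    return mean + code @ components    # decode


def make_sampler(rng, dim, n_factors):
    """Return a sampler for one synthetic 'population' of flattened images."""
    mean = rng.normal(size=dim)
    basis = rng.normal(size=(n_factors, dim))

    def sample(count):
        factors = rng.normal(size=(count, n_factors))
        noise = 0.05 * rng.normal(size=(count, dim))
        return mean + factors @ basis + noise

    return sample


rng = np.random.default_rng(0)
DIM, FACTORS = 64, 4  # hypothetical sizes, far smaller than a real face image

sample_reference = make_sampler(rng, DIM, FACTORS)  # stand-in for the research dataset
sample_visitors = make_sampler(rng, DIM, FACTORS)   # stand-in for the live visitor data

reference_model = fit_linear_autoencoder(sample_reference(500), FACTORS)
visitor_model = fit_linear_autoencoder(sample_visitors(500), FACTORS)

# A new visitor is reconstructed by both models.
new_visitor = sample_visitors(1)[0]
error_reference = np.linalg.norm(new_visitor - reconstruct(reference_model, new_visitor))
error_visitor = np.linalg.norm(new_visitor - reconstruct(visitor_model, new_visitor))

print(f"error with reference-trained model: {error_reference:.2f}")
print(f"error with visitor-trained model:   {error_visitor:.2f}")
```

The model trained on the other population pulls the input toward what it has already seen, so its reconstruction error is much larger. Neither model is "wrong" in its own terms; each simply reflects its training set.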

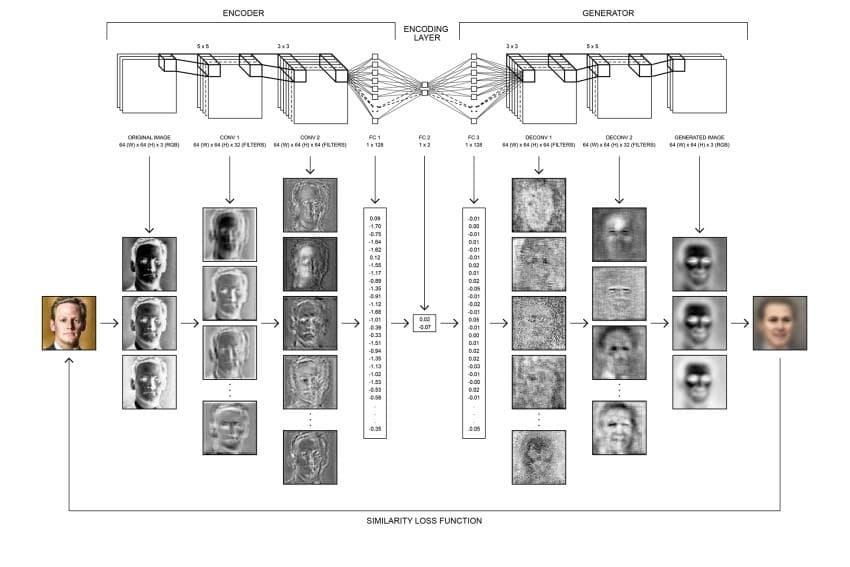

Diagram of encoder and decoder network architecture based on convolutional neural networks (CNN)
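For readers unfamiliar with this kind of model, the following is a minimal Keras sketch of a convolutional encoder and decoder pair. It is an assumption-laden illustration, not the network used in the installation: the input size (64x64 grayscale), layer counts, filter sizes, latent dimension, and the `build_autoencoder` name are all hypothetical.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers


def build_autoencoder(input_shape=(64, 64, 1), latent_dim=32):
    """Build a small convolutional autoencoder (all sizes are illustrative)."""
    # Encoder: two strided convolutions halve the resolution twice,
    # then a dense layer compresses the result into the latent vector.
    inputs = keras.Input(shape=input_shape)
    x = layers.Conv2D(16, 3, strides=2, padding="same", activation="relu")(inputs)
    x = layers.Conv2D(32, 3, strides=2, padding="same", activation="relu")(x)
    x = layers.Flatten()(x)
    latent = layers.Dense(latent_dim, name="latent")(x)
    encoder = keras.Model(inputs, latent, name="encoder")

    # Decoder: mirror of the encoder, upsampling back to the input size.
    h, w = input_shape[0] // 4, input_shape[1] // 4
    latent_inputs = keras.Input(shape=(latent_dim,))
    x = layers.Dense(h * w * 32, activation="relu")(latent_inputs)
    x = layers.Reshape((h, w, 32))(x)
    x = layers.Conv2DTranspose(16, 3, strides=2, padding="same", activation="relu")(x)
    outputs = layers.Conv2DTranspose(
        input_shape[2], 3, strides=2, padding="same", activation="sigmoid"
    )(x)
    decoder = keras.Model(latent_inputs, outputs, name="decoder")

    # The full autoencoder learns to reproduce its own input.
    autoencoder = keras.Model(inputs, decoder(encoder(inputs)), name="autoencoder")
    autoencoder.compile(optimizer="adam", loss="mse")
    return encoder, decoder, autoencoder


encoder, decoder, autoencoder = build_autoencoder()

# Untrained forward pass on a dummy batch, just to check the shapes.
batch = np.zeros((2, 64, 64, 1), dtype="float32")
codes = encoder.predict(batch, verbose=0)
reconstructed = autoencoder.predict(batch, verbose=0)
print(codes.shape, reconstructed.shape)
```

Training such a model would amount to calling `autoencoder.fit(faces, faces, ...)` on an array of cropped face images scaled to the range 0 to 1, so that the network learns to reproduce the kinds of faces in its dataset.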

As these kinds of predictive algorithms play a larger role in our daily lives, we will have to navigate the tradeoff between the convenience they offer and the potential dangers to our privacy and freedom of choice. While many people fear the potential of artificial intelligence to take over the world, I think a much more realistic fear is what happens when people (through laziness or incompetence) start to outsource their decision-making to algorithms that appear objective on the surface yet may conceal deep hidden biases.